Our last #vSphereChat on Twitter was truly something special. Not only did we host a tweet chat across THREE different VMware Twitter accounts, but we also discussed our latest launches: #vSphere7, #vSAN7 and #CloudFoundation4. The transcript is below.

Another tweet chat, this one on vSAN, is coming up on April 30, 2020 at 11:00 AM Pacific. Have questions or thoughts on hyperconverged storage? Bring them! Just follow the #vSANchat hashtag on Twitter.

For the March 26 tweet chat, vSphere expert Bob Plankers was joined by members of the vSAN team, John Nicholson and Pete Flecha, as well as Josh Townsend and Rick Walsworth from the Cloud Foundation team. In case you missed it, we’re recapping the vSphere event below.

Bob Plankers

A1: That’s a loaded question. It might be easier to say what it doesn’t do at this point. vSphere 7 is the core of the virtual data center and brings all sorts of security, availability, and performance features with it to make workloads easy to run.

A1 Part 2: I was talking to someone this morning about how vSphere 7 helps us reduce risk in our environments. Features like vMotion and DRS make the infrastructure self-healing and flexible. VM Encryption helps us be secure with whatever equipment we have.

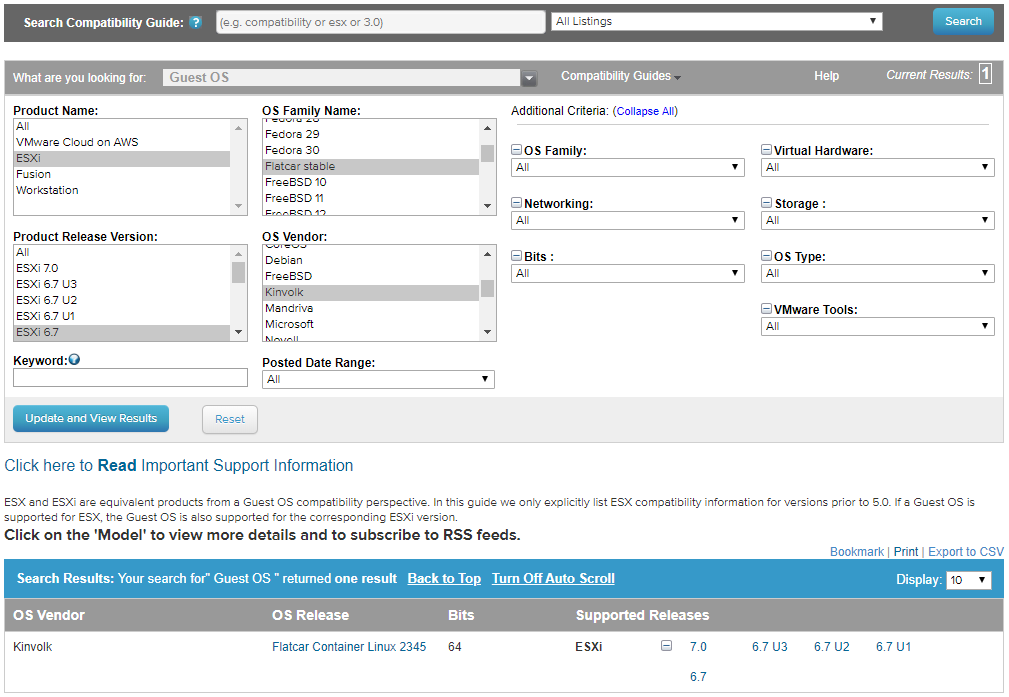

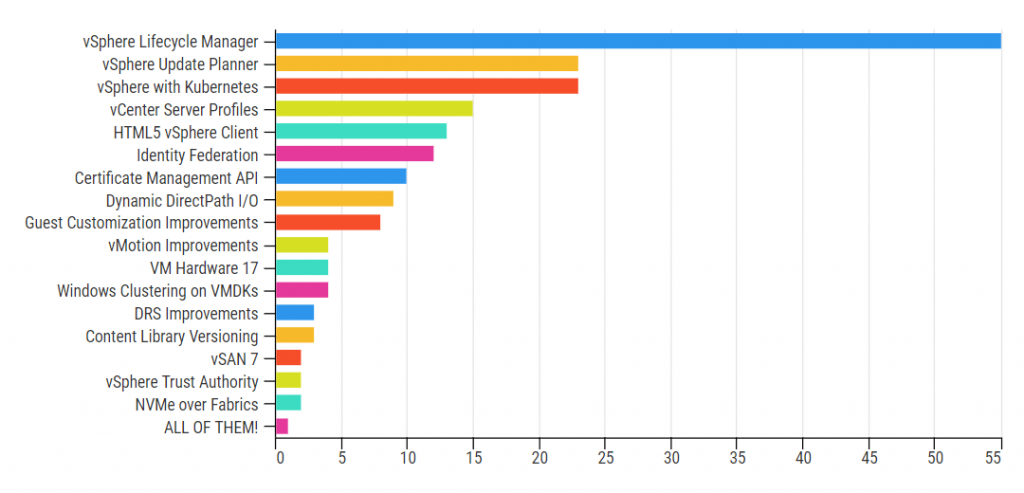

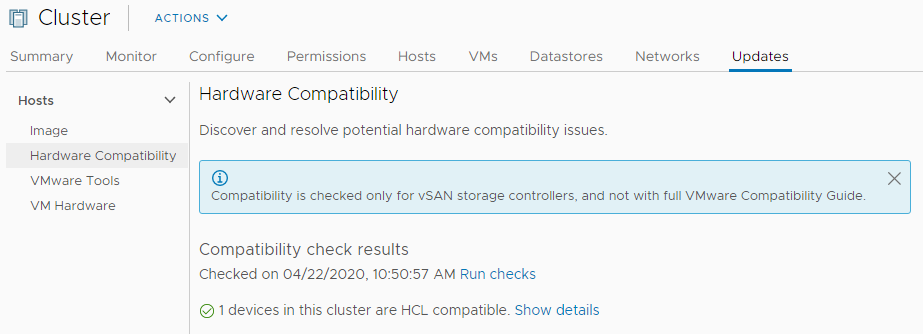

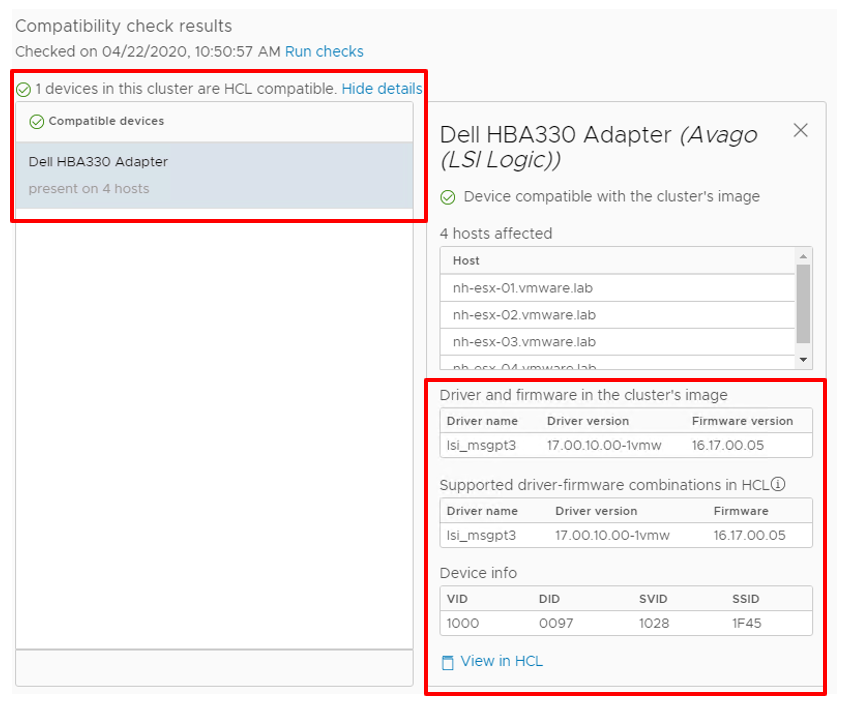

A1 Part 3: The new Lifecycle Manager features in vSphere 7 are going to be pretty nice, too: firmware management added to vSphere patching, integrated HCL checking, integrated compatibility matrix checking. Lots of manual admin work, now eliminated.

Bob Plankers

A2 Part 1: In vSphere 6.7 and earlier we had Update Manager. Now in vSphere 7, we get Lifecycle Manager, which also manages hardware firmware and knows about other products. So, for instance, we can make sure that our Dell PowerEdge R640s get the right disk controller firmware.

A2 Part 2: Firmware is really important to vSAN 7 and the vSAN Chat folks as well. Disks, controllers, all of it needs to run firmware versions that are safe and perform well.

A2 Part 3: We care about other solutions, too. Have @vRealizeOps installed? vSphere 7 Lifecycle Manager will check to make sure it’s the right level for a vSphere upgrade.

A2 Part 4: We have lots of info on vSphere 7 Lifecycle Manager going up on our blog. Subscribe: we’re publishing new posts every Monday through Thursday until May. It’s nuts!

Bob Plankers

A3: We use the term “intrinsic security” a lot. It means that we build security into product development instead of adding it as an afterthought once the product is built. When you do that, you get better, more comprehensive security that’s easier to operate and use.

A3 Part 2: It also means that our security tools, such as @vmw_carbonblack, integrate well with vSphere 7 and form a holistic solution. The goal is to make security easy to enable and operate.

A3 Part 3: At the vSphere 7 level we do a LOT of hardening of the hypervisor and tools, so when you install them you get the best practices right out of the proverbial box.

A3 Part 4: The folks in the @vmwarevcf / VMware Cloud Foundation chat also have really great hardening and compliance guides in the VMware Validated Designs, here, which currently cover PCI DSS 3.2.1 and NIST 800-53v4.

A3 Part 5: There are also all the features like VM Encryption, vTPM, and so on. Easy to enable, easy to use. In fact, vSphere 7 (and 6.7) are the only hypervisors to natively support Microsoft Device Guard and Credential Guard. It makes it easy to be secure and in compliance!

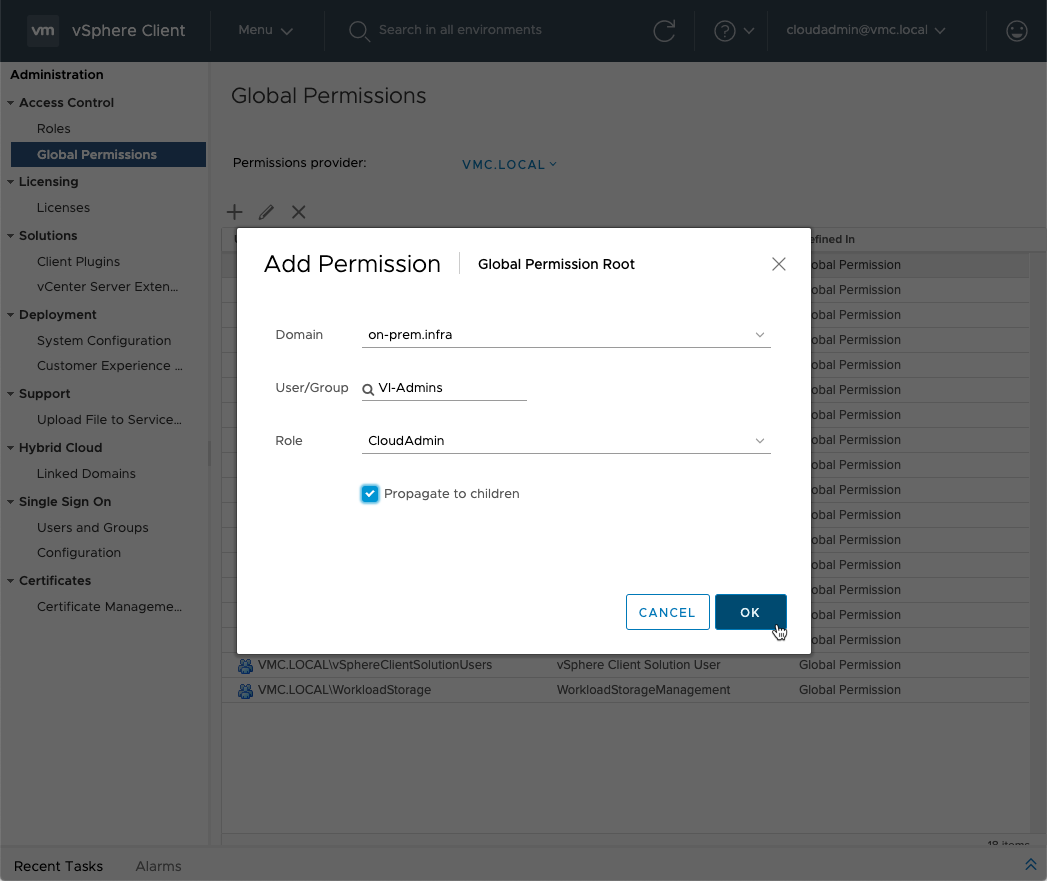

A3 Part 6: Also keep an eye on Identity Federation!

Bob Plankers

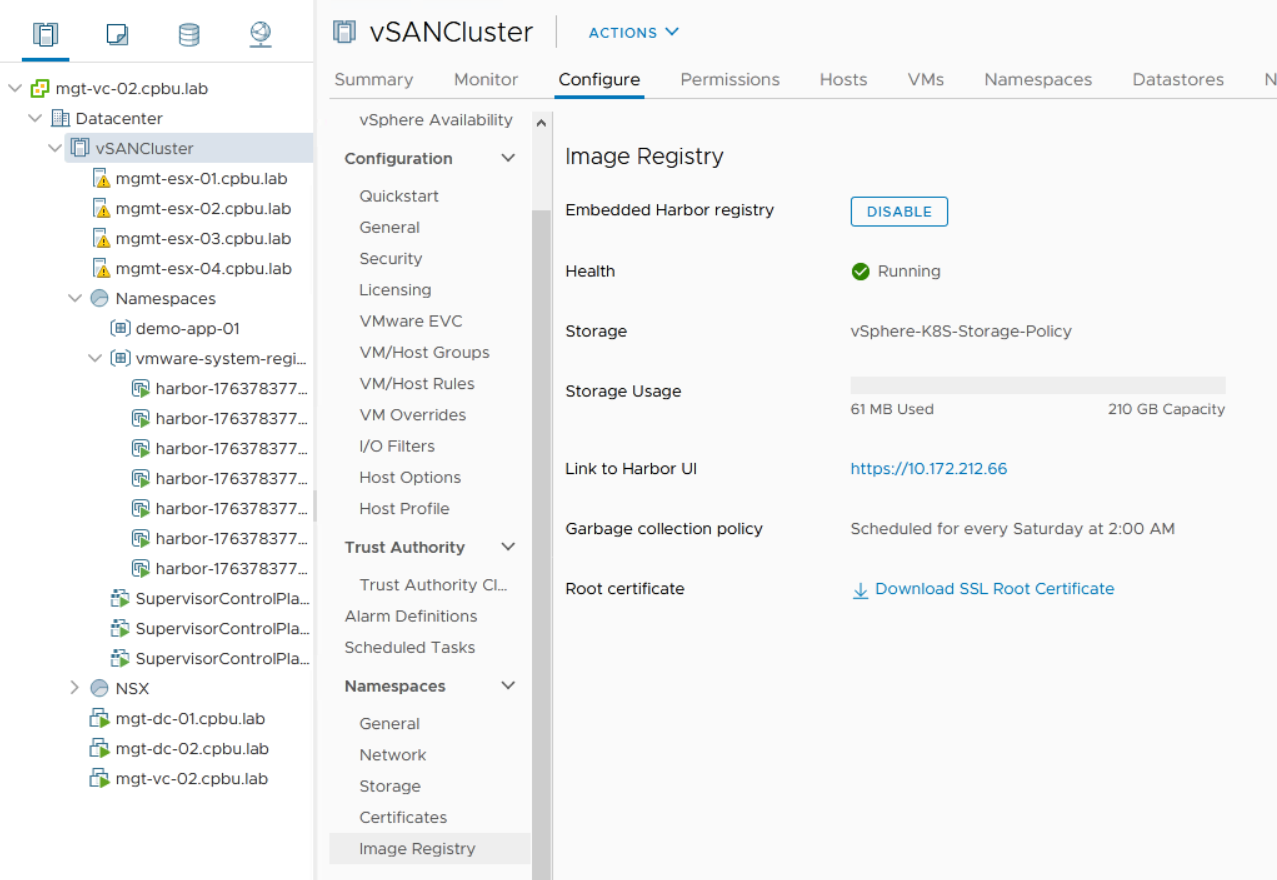

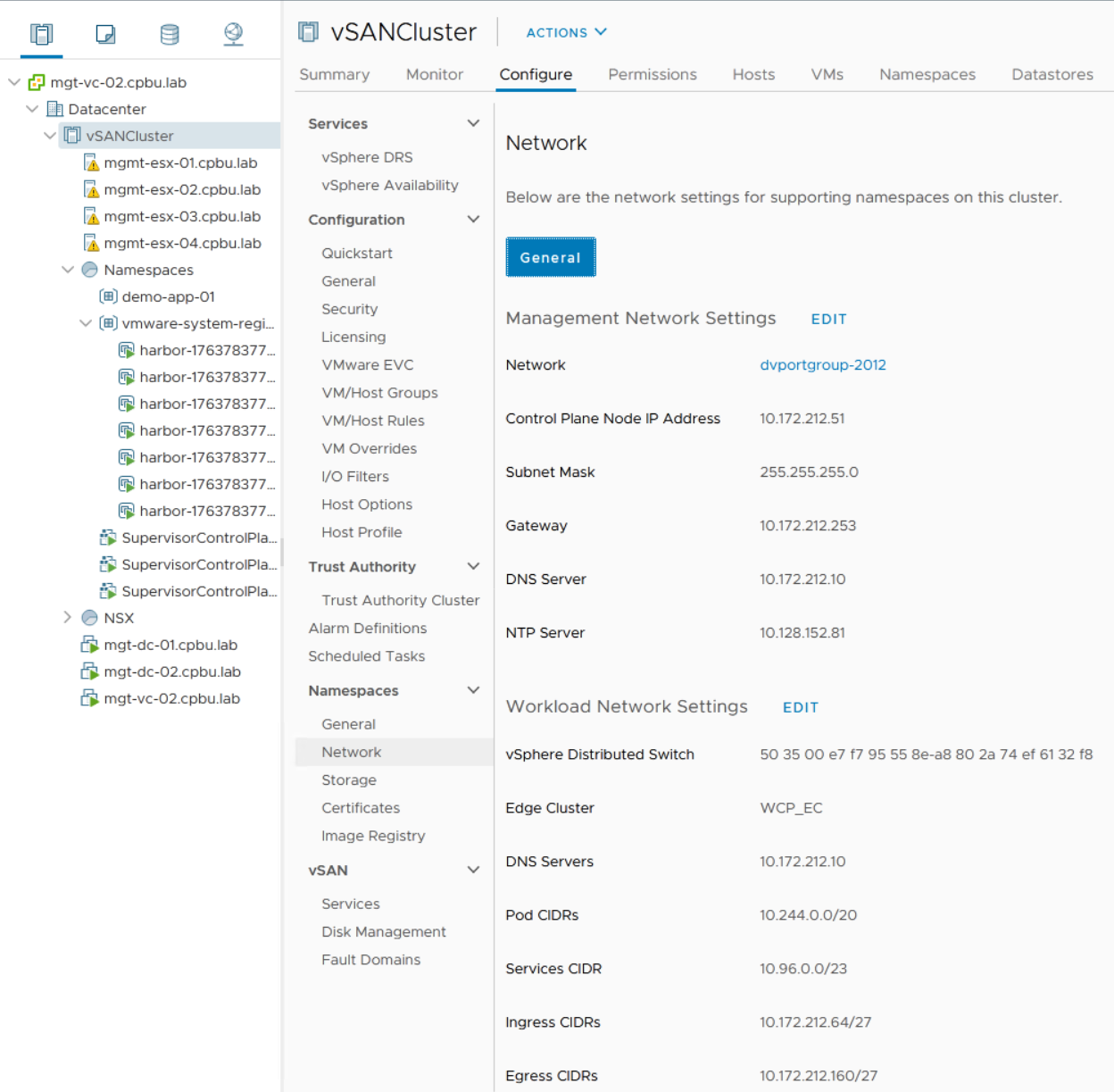

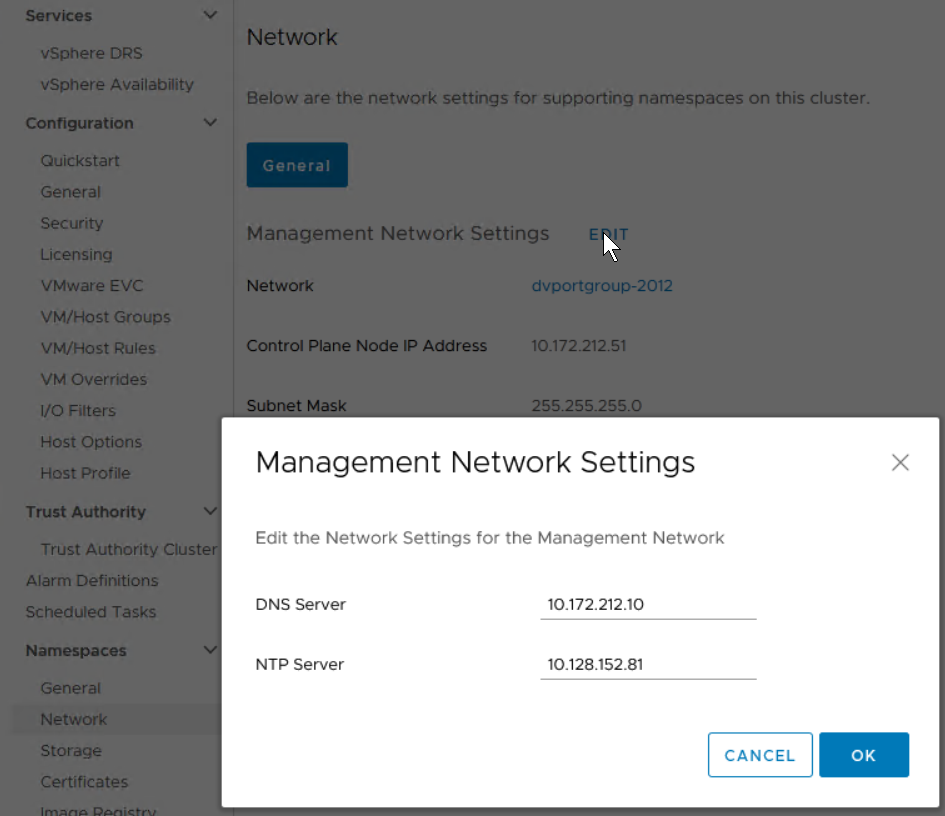

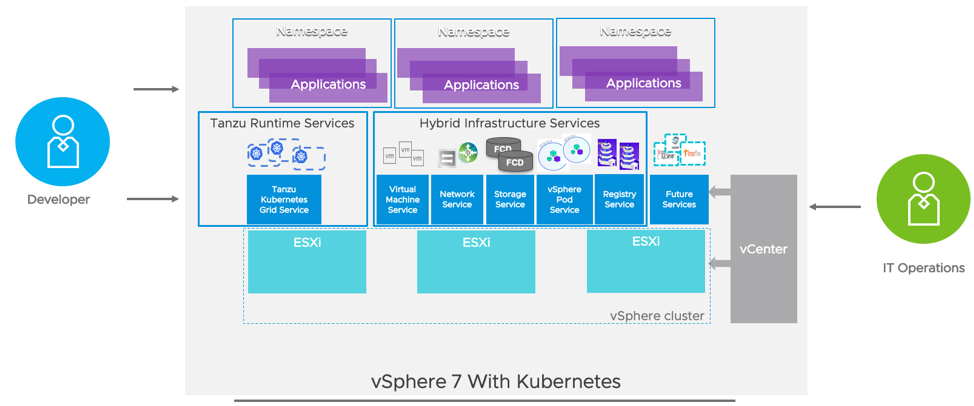

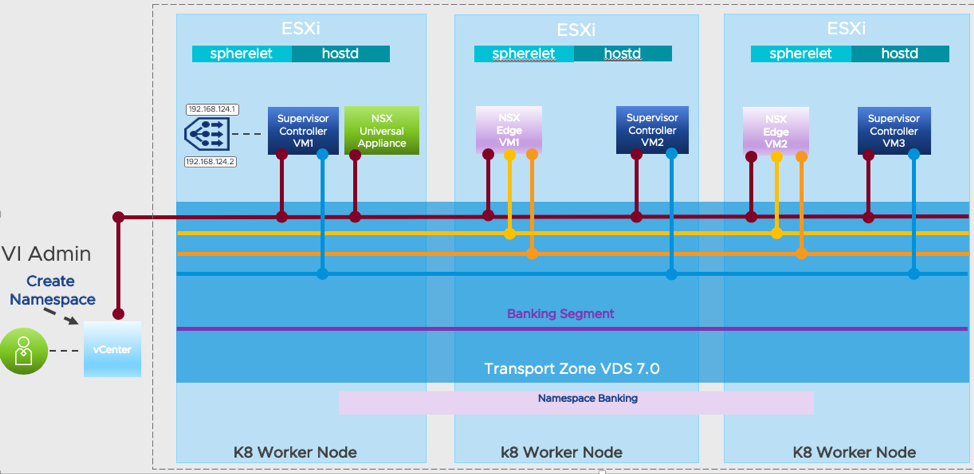

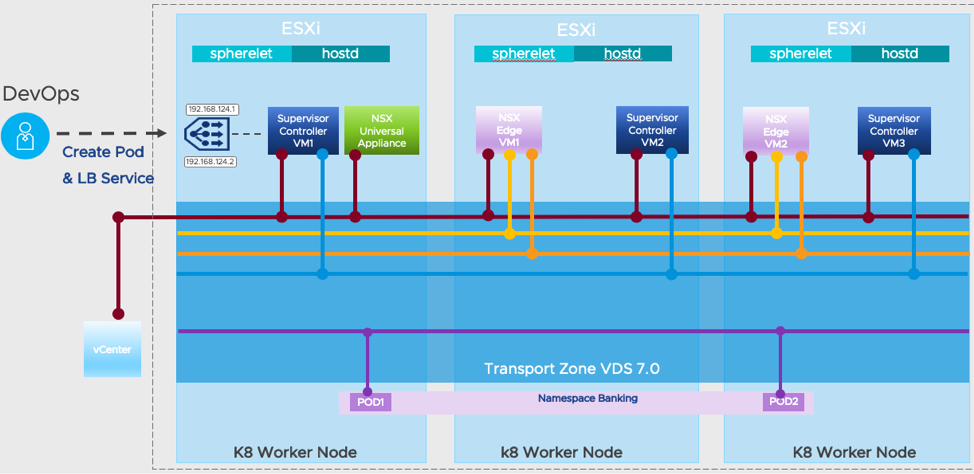

A4: vSphere 7 with Kubernetes is going to go a long way towards this. A couple of good resources to start with can be found here and here.

A4 Part 2: Namespaces is basically a really killer version of the vApp concept, with developer self-service in it.

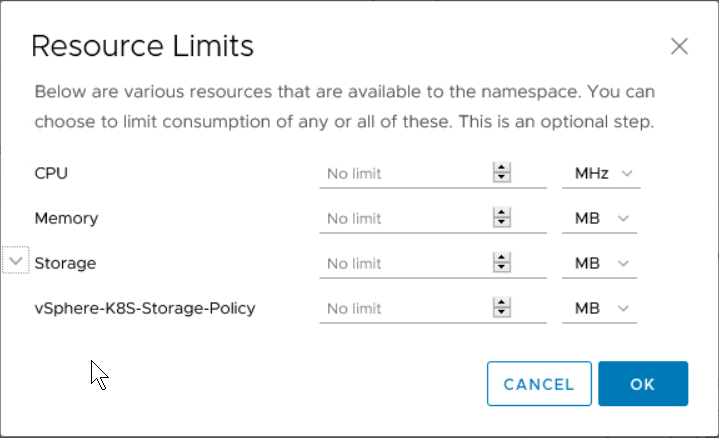

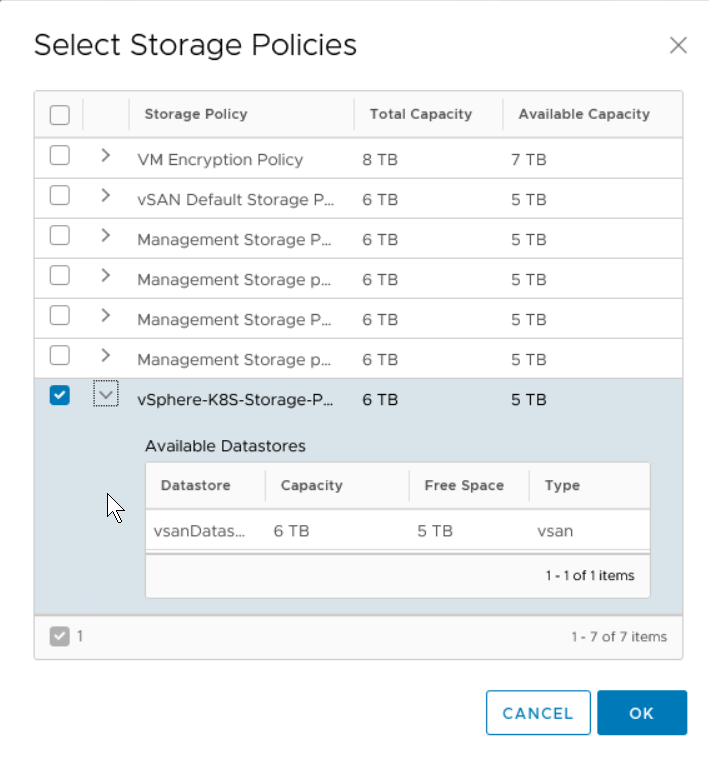

A4 Part 3: vSphere 7 Namespaces lets a vSphere Admin set resource limits and “guard rails” but then developers can do their own thing without having to open tickets all. the. time. GLORIOUS for everyone involved.

Bob Plankers

A5: vSphere with Kubernetes is what Project Pacific turned into. Options for Kubernetes clusters are vSphere Pod Service clusters or Tanzu Kubernetes Grid clusters. @mikefoley posted this just this morning!

A5 Part 2: Everything vSphere is and has been is still there, but we also add containers, both as native objects in ESXi and as Tanzu Kubernetes Grid (TKG) clusters.

AND…

A5 Part 3: You can use the vSphere Client to do admin tasks, like always, or PowerCLI, or whatever tool you want. Still works. But you can also ask Kubernetes to do it, too. Mix & match infrastructure all you want in your YAML files. Flexible, nice APIs, all in Namespaces.

A5 Part 4: And because it’s still just vSphere 7 (just!) under the hood, it already works with all your corporate governance, processes, and such. Problem solved: it lets people focus on productive things, not red tape.

Bob Plankers

A6: ALL THE CASES.

No, really. It’s very flexible, scales from very small (Mac Mini) to very big (dozens of CPU sockets and cores). Networking is very flexible and fast. And with vSphere 7’s focus on improving vMotion and DRS for big workloads, there’s…

A6 Part 2: …very little reason now to NOT run something in vSphere 7. We just need to keep working with the app owners who doubt it and tell us their apps cannot be virtualized. That’s absolutely not true, and it does their organizations a disservice.

A6 Part 3: Same for vSAN Chat and vSAN 7, too. A lot of the issues with adopting new releases like #vsphere7 come down to people, and their fears. “If we automate something what will I do for my job?” — turns out there’s always something to do!

Bob Plankers

A7: We should get the @vmwarevcf folks in on this, too, @RickWalsworth. In short, it brings K8s functions into an enterprise in very short order. Want to get developers going with modern apps quickly? VCF is a GREAT way to do that.

Rick Walsworth

A7: Yes, there are add-on SKUs so that existing vSphere customers can add the remaining NSX, vSAN, vRealize, and SDDC components to complete the full stack. Go here for more info.

Bob Plankers

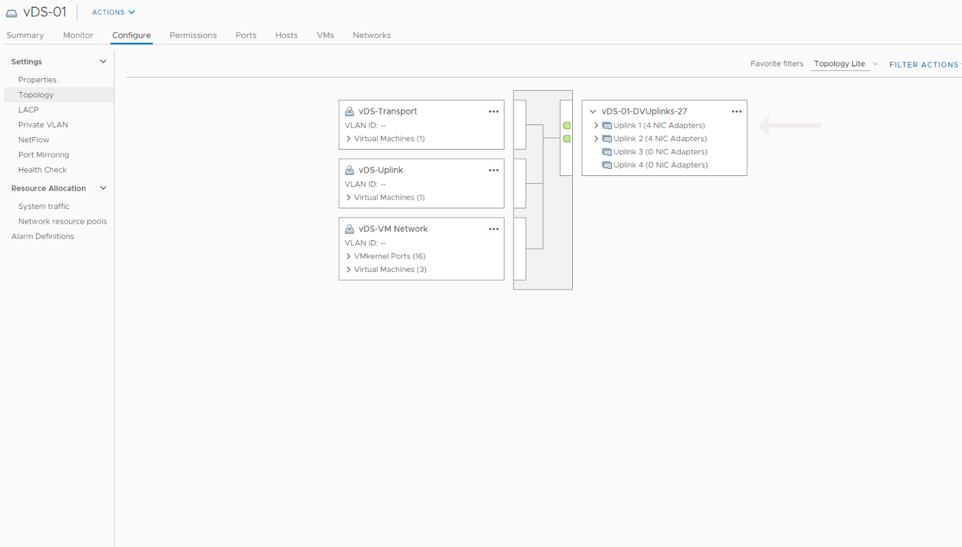

A8: VMware Cloud Foundation is very flexible about storage and infrastructure. You can use the storage you have for workloads, but for the VCF management cluster, it relies on vSAN 7. It’s pretty amazing and eliminates a TON of admin overhead.

Rick Walsworth

A8: Resizing workload domains on the fly lets you allocate capacity on demand, so you can add storage and network resources easily without having to deploy new infrastructure.

Bob Plankers

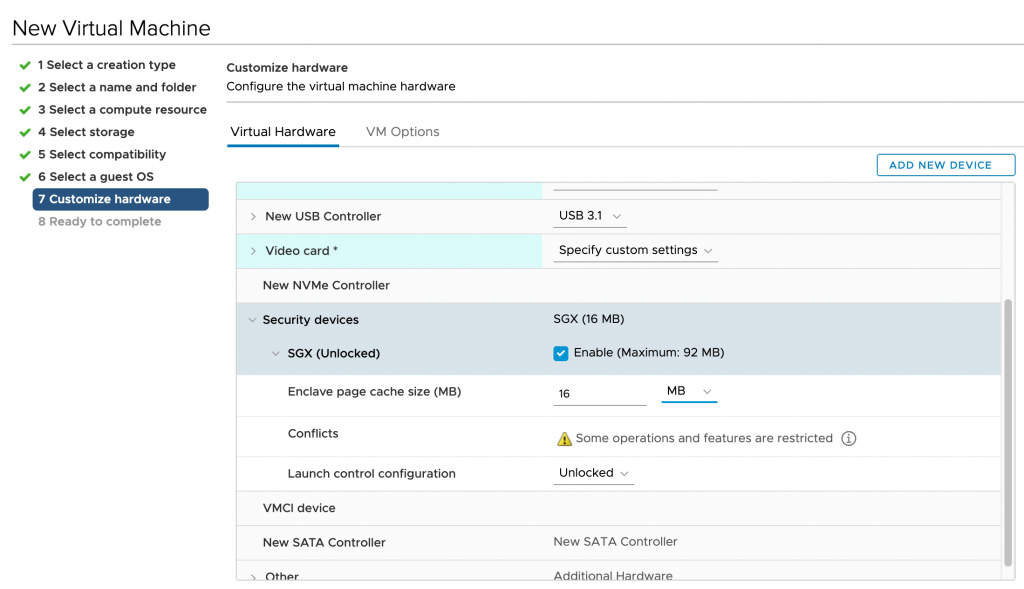

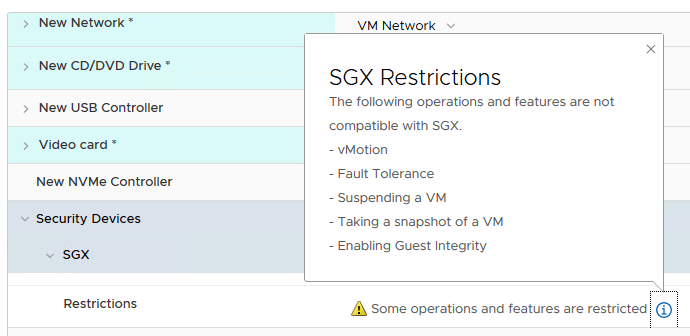

A9: VMware and vSphere are really focusing on simplifying things, making things easy to use, making things fast. DRS improvements, vMotion improvements, Lifecycle, Identity Federation, Precision Clocks, vSGX, all sorts of things.

A9 Part 2: Check out our blogs on all the new things. And join us on April 2 for a vSphere Admin launch event!

Bob Plankers

A10: This is going to sound bad, but Thing from The Fantastic Four. SOLID, STABLE, GREAT LOOKING!

Narrator’s Voice: At this point, having said “Thing,” Bob was asked to stop tweeting. 🙂

Follow the additional VMware experts who participated in this chat and in the vSAN and Cloud Foundation chats, including John Nicholson (@Lost_Signal), Pete Flecha (@vPedroArrow), Josh Townsend (@joshuatownsend) and Rick Walsworth (@RickWalsworth).

We are excited about vSphere 7 and what it means for our customers and the future. Watch the vSphere 7 Launch Event replay, an event designed for vSphere Admins, hosted by theCUBE. We will continue posting new technical and product information about vSphere 7 and vSphere with Kubernetes Monday through Thursday into May 2020. Join us by following the blog directly using the RSS feed, on Facebook, and on Twitter. Thank you, and please stay safe.

The post #vSphere7 Launch TweetChat with #vSAN7 & #CloudFoundation4 appeared first on VMware vSphere Blog.

(By Michael West, Technical Product Manager, VMware)